Automating Data Analysis and Reporting with Ruby

In today’s data-driven world, automation is the linchpin of efficiency. For data analysts and developers, automating the painstaking tasks of data collection, processing, and report generation can free hours for higher-value, creative work. Ruby, often celebrated for its elegant syntax and object-oriented design, isn’t just for web development. Its ecosystem of libraries (gems) covers fetching, cleaning, analyzing, and presenting data, which makes it a practical choice for streamlining data analytics. Knowing how to use these tools is a valuable skill in any professional’s arsenal.

The Ruby Perspective on Data Automation

Data automation is more than a convenience; it’s a necessity. With the volume and complexity of data available today, manual processes invite errors, inconsistencies, and wasted time. Ruby’s standard library and gem ecosystem provide the building blocks for data pipelines that are robust as well as pleasant to build and maintain.

In this guide, we explore how you, as a data analyst or Ruby developer, can use Ruby to automate mundane data-related tasks, from gathering data from various sources to generating insightful reports, and where Ruby earns its place at each step.

Automating Data Gathering

Exploring Ruby Libraries for Extraction

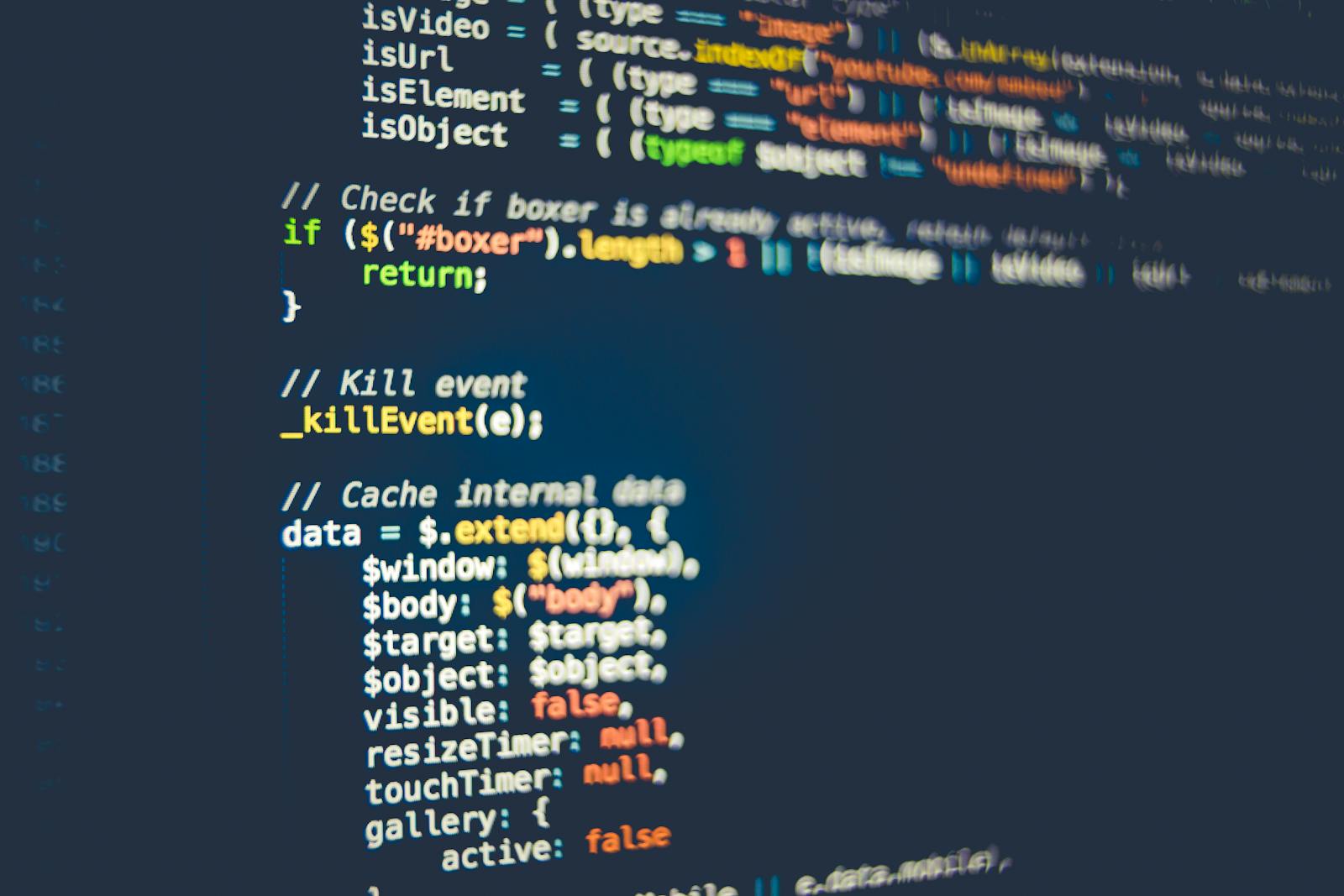

One of Ruby’s strengths lies in the abundance of third-party libraries available. For data extraction, gems like Nokogiri and Mechanize are indispensable. Nokogiri parses HTML and XML quickly and lets you pick out elements with CSS selectors or XPath, which makes it the workhorse of most Ruby scrapers. Mechanize builds on Nokogiri to simulate a browser session: it follows links, fills in and submits forms, and keeps cookies between requests, which helps with sites that put data behind forms or logins. It does not run JavaScript, so pages that build their content in the browser need a different approach.

Streamlining the Collection Process

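Ruby’s concurrency tools offer an advantage in data collection. The ‘concurrent-ruby’ gem provides higher-level abstractions such as futures, promises, and thread pools, so you can run several slow tasks at once without managing threads by hand. Because the standard Ruby interpreter (MRI) releases its global lock while a thread waits on I/O, you can fetch data from APIs, scrape the web, and query databases concurrently, so total collection time approaches that of the slowest request rather than the sum of all of them. CPU-bound work does not speed up this way on MRI; that needs multiple processes.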
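The sketch below shows the idea with Ruby’s built-in ‘Thread’ class instead of concurrent-ruby, so it runs without extra gems; concurrent-ruby’s futures and thread pools wrap the same pattern with error handling and pool sizing. The three sources and the ‘fetch_all’ helper are hypothetical, and each source sleeps to simulate network or database latency.

```ruby
# Hypothetical data sources; each sleep stands in for an API call,
# a page download, or a database query.
SOURCES = {
  api:      -> { sleep 0.3; { price: 101.5 } },
  scrape:   -> { sleep 0.3; ["headline 1", "headline 2"] },
  database: -> { sleep 0.3; [{ id: 1 }, { id: 2 }] }
}.freeze

# Start one thread per source, then wait for all of them.
# Thread#value joins the thread and returns its block's result
# (re-raising any exception the thread raised).
def fetch_all(sources)
  threads = sources.map { |name, fetch| [name, Thread.new(&fetch)] }
  threads.to_h { |name, thread| [name, thread.value] }
end

started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
results = fetch_all(SOURCES)
elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
puts "fetched #{results.keys.join(', ')} in #{elapsed.round(2)}s"
```

Run sequentially, the three sources would take about 0.9 seconds; with threads the waits overlap and the total stays close to 0.3 seconds.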

Processing Data with Ruby

Data Cleaning and Transformation

Ruby’s Enumerable methods provide a succinct syntax for data manipulation. Methods like ‘map’, ‘reduce’, and ‘select’ can transform raw data into the format you need, making it clean and ready for analysis. The standard ‘csv’ library reads and writes CSV files, an essential step in most data analysis pipelines.
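Here is a small sketch of that style using only the standard library. The inline CSV, its column names, the cleaning rules (trim whitespace, drop rows with a missing amount, normalize region names), and the ‘clean_totals’ and ‘totals_csv’ helpers are hypothetical examples of what a real feed might need.

```ruby
require "csv"

# Hypothetical raw export with stray whitespace, inconsistent casing,
# and one row missing its amount.
RAW = <<~ROWS
  region,amount
   north ,120.50
  South,80
  north,
  SOUTH , 19.5
ROWS

# select drops incomplete rows, map normalizes each remaining row,
# and reduce folds the cleaned rows into per-region totals.
def clean_totals(raw)
  CSV.parse(raw, headers: true)
     .select { |row| !row["amount"].to_s.strip.empty? }
     .map { |row| [row["region"].strip.downcase, Float(row["amount"].strip)] }
     .reduce(Hash.new(0.0)) { |totals, (region, amount)| totals[region] += amount; totals }
end

# Write the cleaned totals back out as CSV text, sorted by region.
def totals_csv(totals)
  CSV.generate do |csv|
    csv << ["region", "total"]
    totals.sort.each { |region, total| csv << [region, total.round(2)] }
  end
end

puts totals_csv(clean_totals(RAW))
```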

Handling Large Datasets

When dealing with large files, memory efficiency is a concern. Ruby’s ‘CSV.foreach’ reads a CSV file one row at a time instead of loading the entire file into memory. For other formats, IO methods such as ‘File.foreach’ and ‘IO#each_line’, combined with batching via ‘each_slice’, let you process data in manageable chunks without overwhelming the system.
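A minimal sketch of both patterns, assuming a CSV with hypothetical ‘region’ and ‘amount’ columns: ‘stream_totals’ aggregates row by row, and ‘each_batch’ hands rows to a caller in fixed-size groups (for example, for bulk database inserts). Both helper names are illustrative.

```ruby
require "csv"
require "tempfile"

# Stream the file row by row: CSV.foreach yields one parsed row at a time,
# so memory use stays flat regardless of file size.
def stream_totals(path)
  totals = Hash.new(0.0)
  CSV.foreach(path, headers: true) do |row|
    totals[row["region"]] += Float(row["amount"])
  end
  totals
end

# Without a block, CSV.foreach returns an Enumerator; each_slice then pulls
# only `size` rows into memory before yielding them as one batch.
def each_batch(path, size, &block)
  CSV.foreach(path, headers: true).each_slice(size, &block)
end

# Demo on a small hypothetical file; the same code works unchanged on
# files much larger than available memory.
sample = Tempfile.new(["sales", ".csv"])
sample.write("region,amount\nnorth,10\nsouth,5\nnorth,2.5\n")
sample.close
p stream_totals(sample.path)
each_batch(sample.path, 2) { |batch| puts "batch of #{batch.size} rows" }
```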

Generating Reports

Report Generation and Customization

The ‘Prawn’ gem is a reliable solution for generating PDF reports in Ruby. It supports sophisticated, data-rich documents with intricate layouts. By combining Prawn with ‘Gruff’ or ‘GnuplotRB’ to render charts as images, you can produce visually engaging reports directly from Ruby code.

Automating Visualization

In data analytics, visualization is key to understanding. Ruby’s ‘Chartkick’ gem wraps popular JavaScript charting libraries (Chart.js, Google Charts, and Highcharts), so you can create interactive charts with a single line of Ruby. Because these charts render in the browser, they fit best in HTML reports and dashboards, where they make a powerful tool for storytelling with data; for PDF reports, a static-image library such as Gruff is the better match.
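Chartkick’s chart helpers accept a single series as a hash of x-values to y-values, so most of the Ruby work is shaping data into that form. The sketch below does this with the standard library and serializes the result to JSON; the order records and the ‘daily_revenue’ helper are hypothetical, and the chart call itself appears only as a comment because it needs a Rails view with the chartkick gem.

```ruby
require "json"
require "date"

# Hypothetical order records.
ORDERS = [
  { date: Date.new(2024, 1, 1), amount: 30 },
  { date: Date.new(2024, 1, 1), amount: 12 },
  { date: Date.new(2024, 1, 2), amount: 25 }
].freeze

# Shape orders into { "YYYY-MM-DD" => revenue }, sorted by date.
def daily_revenue(orders)
  orders
    .group_by { |order| order[:date].iso8601 }
    .transform_values { |day| day.sum { |order| order[:amount] } }
    .sort
    .to_h
end

series = daily_revenue(ORDERS)
puts JSON.generate(series)
# In a Rails view with the chartkick gem: <%= line_chart series %>
```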

Understanding the Benefits of Automation with Ruby

Saving Time and Resources

The primary benefit of automating data analysis with Ruby is the efficiency it offers. By reducing manual effort, analysts can focus on interpretation and strategic insights, while developers can concentrate on building and maintaining the automation processes that do the heavy lifting.

Enhancing Accuracy and Consistency

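Human error is an unavoidable aspect of manual data processing. Automating a workflow in Ruby removes the manual steps where those errors creep in, so the same input is processed the same way every time. The scripts themselves can still contain bugs, but once a bug is found and fixed, the fix applies to every future run. This is particularly important for regulatory compliance or whenever results must be precise and reproducible.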

Real-World Case Studies and Examples

Finance and Forecasting

Consider a Ruby-based automation system that lets a financial analyst pull in daily stock data from various APIs, process it for trends and anomalies, and generate custom reports that inform critical forecasting decisions. Automation like this saves significant time and can improve the accuracy of financial models, because the same data preparation runs identically every day.
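To make “process it for trends and anomalies” concrete, here is one simple way to do it: a moving average for the trend and a z-score on daily returns to flag unusual moves. The prices, window size, threshold, and helper names are hypothetical choices for illustration, not what any particular system uses.

```ruby
# Hypothetical daily closing prices.
PRICES = [100.0, 101.0, 100.5, 101.5, 102.0, 101.8, 102.3, 95.0, 95.5, 96.0].freeze

# Trend: simple moving average over a sliding window of `window` days.
def moving_average(prices, window)
  prices.each_cons(window).map { |slice| slice.sum / window.to_f }
end

# Anomalies: indexes of days whose return (versus the previous day) lies more
# than `threshold` standard deviations from the mean daily return.
def anomalous_days(prices, threshold: 2.0)
  returns = prices.each_cons(2).map { |prev, curr| (curr - prev).fdiv(prev) }
  return [] if returns.empty?

  mean = returns.sum / returns.size
  std = Math.sqrt(returns.sum { |r| (r - mean)**2 } / returns.size)
  return [] if std.zero?

  # returns[i] is the move into day i + 1, hence the offset.
  returns.each_index
         .select { |i| ((returns[i] - mean) / std).abs > threshold }
         .map { |i| i + 1 }
end

puts "3-day trend: #{moving_average(PRICES, 3).map { |v| v.round(2) }.inspect}"
puts "anomalous days: #{anomalous_days(PRICES).inspect}"
```

With these prices, only the drop to 95.0 on day 7 stands out; a production system would likely use a rolling window for the statistics so old data does not dominate.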

Customer Insights and Business Intelligence

A retail company can use Ruby automation to gather data from its customer-facing systems, process it for patterns and preferences, and produce reports that drive targeted sales and marketing campaigns. Responding quickly to customer data in this way can improve customer satisfaction and increase sales.
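A very small version of the “patterns and preferences” step might count each customer’s purchases by category with ‘Enumerable#tally’ (Ruby 2.7+), pick the most frequent one, and then group customers into segments for a campaign. The purchase records, field names, and helper names are hypothetical.

```ruby
# Hypothetical purchase records pulled from customer-facing systems.
PURCHASES = [
  { customer: "c1", category: "shoes" },
  { customer: "c1", category: "shoes" },
  { customer: "c1", category: "hats" },
  { customer: "c2", category: "bags" },
  { customer: "c3", category: "shoes" }
].freeze

# Map each customer to the category they buy most often.
def favorite_categories(purchases)
  purchases
    .group_by { |row| row[:customer] }
    .transform_values { |rows| rows.map { |row| row[:category] }.tally.max_by { |_cat, n| n }.first }
end

# Invert to category => customers, e.g. to target one campaign per category.
def segments(favorites)
  favorites.group_by { |_customer, category| category }
           .transform_values { |pairs| pairs.map(&:first) }
end

p segments(favorite_categories(PURCHASES))
```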

Frequently Asked Questions

How can I get started with Ruby for data analysis?

Getting started with Ruby for data analysis requires a foundational understanding of data types and structures, as well as experience with Ruby’s syntax and common data manipulation techniques. Begin by familiarizing yourself with the various gems available for data processing and visualization, and work through small projects to build your skills.

Is Ruby suitable for handling big data?

While Ruby is not traditionally associated with big data, it can handle large datasets with the right techniques. Streaming APIs, memory-efficient reading and writing methods, and a well-optimized approach to data processing let Ruby work through files far larger than available memory. For workloads distributed across many machines, however, dedicated big data frameworks remain the better fit.
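One such technique is a lazy enumerator: chaining ‘.lazy’ onto a streaming source such as ‘File.foreach’ means each line flows through the whole pipeline before the next is read, and ‘first(n)’ stops reading once it has enough. The log format and the ‘first_errors’ helper below are hypothetical.

```ruby
require "tempfile"

# Return the first `limit` error messages from a large log without reading
# the rest of the file. File.foreach without a block returns an Enumerator;
# .lazy makes map/select handle one line at a time, and first(limit)
# stops the iteration as soon as enough matches are found.
def first_errors(path, limit)
  File.foreach(path)
      .lazy
      .map(&:chomp)
      .select { |line| line.start_with?("ERROR ") }
      .map { |line| line.delete_prefix("ERROR ") }
      .first(limit)
end

# Demo on a small hypothetical log file.
log = Tempfile.new("app-log")
log.write("INFO start\nERROR disk full\nINFO ok\nERROR timeout\nERROR retry failed\n")
log.close
p first_errors(log.path, 2)
```

Without ‘.lazy’, each ‘map’ and ‘select’ would build a full intermediate array of every line in the file before ‘first’ ran.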

How do I maintain and update automated data processes in Ruby?

Documenting your code and being consistent with your naming and organizational conventions will make maintaining Ruby data processes much easier. Regularly reviewing and refactoring your code, testing for edge cases, and keeping abreast of updates to the libraries and gems you use will help ensure your automation remains robust and reliable.

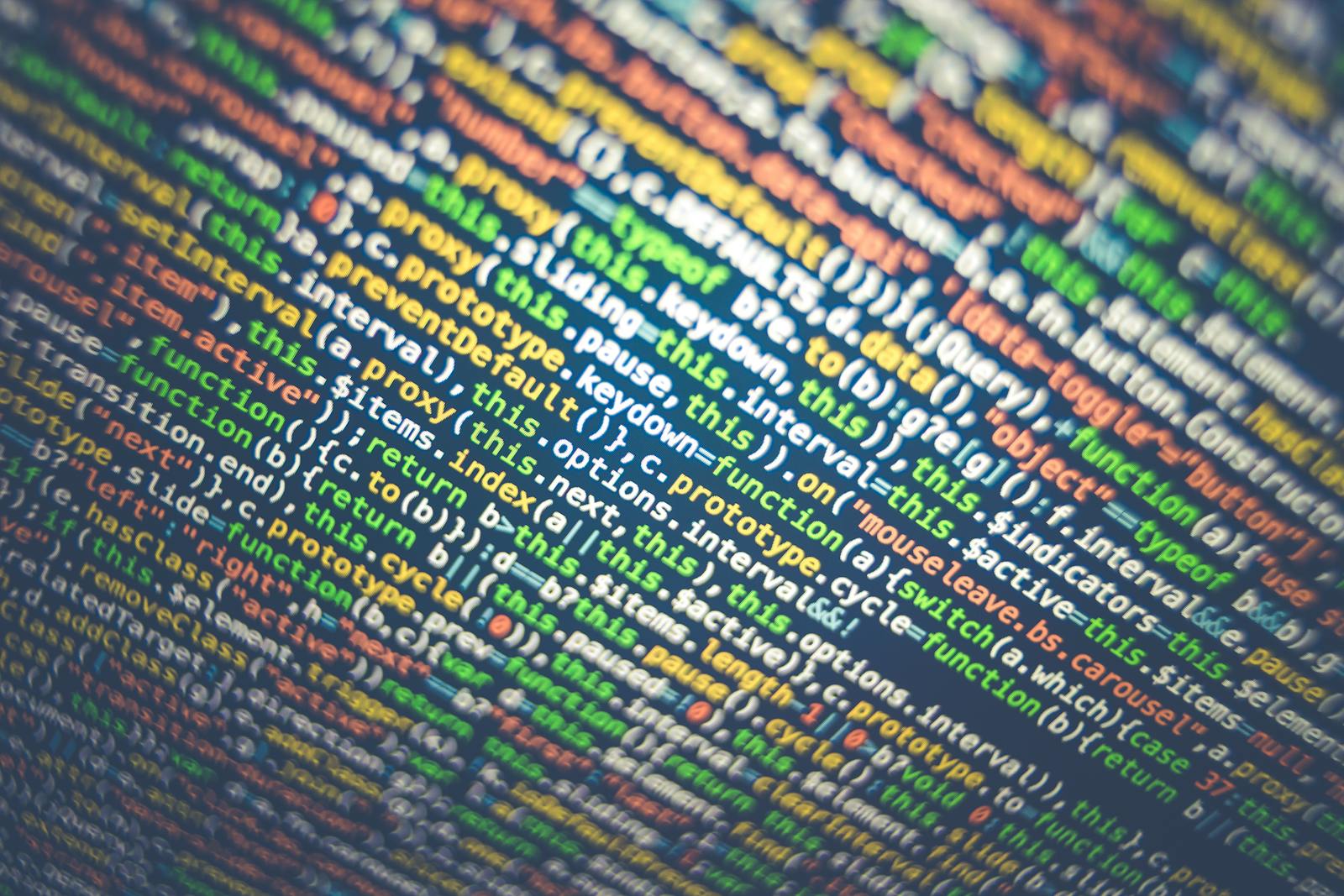

Can I parallelize data processing in Ruby?

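Yes, you can. Ruby’s support for concurrent programming and its rich ecosystem of concurrency libraries make parallel data processing feasible. Threads work well for I/O-bound tasks, but on the standard interpreter (MRI) a global lock prevents threads from running Ruby code on several cores at once, so CPU-bound work needs separate processes. The ‘parallel’ gem covers both cases: ‘Parallel.map’ with the ‘in_threads:’ or ‘in_processes:’ option spreads a collection across workers to speed up your data processing tasks.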
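To show what process-based parallelism involves using only the standard library, the sketch below defines a hypothetical ‘parallel_map’ helper: it splits the input into slices, forks one worker process per slice, and returns each worker’s results through a pipe serialized with ‘Marshal’. It relies on ‘Process.fork’, so it runs on Linux and macOS but not Windows, and it omits error handling (a crashed worker surfaces as a ‘Marshal’ load error in the parent). In practice, ‘Parallel.map(items, in_processes: 4) { ... }’ from the ‘parallel’ gem is the simpler choice.

```ruby
# Hypothetical helper: map `items` through the block using up to `workers`
# forked processes. Unix-only, because it relies on Process.fork.
def parallel_map(items, workers: 4, &block)
  return [] if items.empty?

  slice_size = (items.size / workers.to_f).ceil
  readers = items.each_slice(slice_size).map do |slice|
    reader, writer = IO.pipe
    fork do
      reader.close
      # The child computes its slice and sends the results back serialized.
      writer.write(Marshal.dump(slice.map(&block)))
      writer.close
      exit!(0) # skip at_exit handlers inherited from the parent
    end
    writer.close # the parent keeps only the read end
    reader
  end

  # Read the workers in slice order, so output order matches input order.
  results = readers.flat_map do |reader|
    data = reader.read
    reader.close
    Marshal.load(data)
  end
  Process.waitall
  results
end

# CPU-bound stand-in: sum of squares up to n, computed in two processes.
p parallel_map([200_000, 300_000, 400_000, 500_000], workers: 2) { |n| (1..n).sum { |i| i * i } }
```

Because each worker is a separate process with its own interpreter, the global lock does not stop the slices from running on different cores.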

In Conclusion, the Power of Ruby Awaits

Ruby presents a unique opportunity for data-savvy professionals not only to automate away the cumbersome, labor-intensive elements of data analysis but to do so with elegance and expressiveness. By adopting Ruby for automating data analysis and reporting, you stand to gain significant efficiencies, improved data integrity, and the ability to produce sophisticated reports and visualizations. It’s time to harness the power of Ruby and transform the way you approach data.

More Frequently Asked Questions

Can I use Ruby for data science and machine learning?

Yes, you can. While not as popular as Python or R in the data science community, Ruby has libraries that support tasks like data cleaning, analysis, and machine learning. Some options include ‘Daru’ for data manipulation, the ‘SciRuby’ project’s gems for scientific computing, ‘statsample’ for statistical analysis, and ‘Rumale’ for machine learning algorithms.

How can I improve the performance of my Ruby data processes?

One way to improve performance is by utilizing parallel processing techniques as mentioned in a previous FAQ. Additionally, optimizing your code through techniques like memoization, efficient looping, and avoiding unnecessary object creation can also significantly impact performance. It’s also essential to carefully choose and configure your database solutions and to consider caching where appropriate.
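Memoization caches the result of an expensive call so that repeated lookups with the same input are answered from memory. A common Ruby idiom is a Hash with a default block. In the sketch below, the ‘RateLookup’ class and its exchange rates are hypothetical stand-ins for a slow API or database call, and the ‘slow_calls’ counter exists only to show that the slow path runs once per key.

```ruby
class RateLookup
  attr_reader :slow_calls

  def initialize
    @slow_calls = 0
    # The default block runs only on a cache miss and stores its result,
    # so each currency is fetched at most once.
    @cache = Hash.new { |hash, currency| hash[currency] = fetch_rate(currency) }
  end

  def rate(currency)
    @cache[currency]
  end

  private

  # Hypothetical slow lookup (imagine an HTTP request here).
  def fetch_rate(currency)
    @slow_calls += 1
    { "EUR" => 1.1, "GBP" => 1.3 }.fetch(currency)
  end
end

rates = RateLookup.new
amounts = [["EUR", 10], ["GBP", 5], ["EUR", 20]]
total_usd = amounts.sum { |currency, amount| amount * rates.rate(currency) }
puts "total: #{total_usd.round(2)}, slow lookups: #{rates.slow_calls}"
```

For a single value per object, the shorter ‘@value ||= compute’ idiom does the same job, though it recomputes whenever the cached value is ‘nil’ or ‘false’.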

Are there any security concerns with using Ruby for data processing?

As with all programming languages, vulnerabilities are always possible. Ruby itself is a mature, actively maintained language whose core team ships security fixes in regular point releases; in data pipelines, most risk comes from how your code handles untrusted input and which third-party gems it depends on. Follow best practices such as validating and sanitizing input data, using well-maintained libraries, and regularly updating your code and dependencies to mitigate potential security risks.